| Course ID | CS3210 |
|---|---|
| AY Taken | AY23/24 Sem 1 |
| Taught by | Cristina Carbunaru, Sriram Sami |

Parallel Computing: CS3210 Review

Tl;dr:

CS3210, under Prof. Cristina Carbunaru, is a solid introduction to parallel systems with a focus on theoretical understanding. The course covers various parallel computing models, architectures, and basics. While the lectures may seem basic for those with prior knowledge, the tutorials and labs offer valuable hands-on experience. The assignments are practical and enhance learning, though they can be time-consuming. The quizzes and tests are well-designed and fair. Overall, a worthwhile course if you have the bandwidth and interest in parallel computing.

Who should take this

- Students with little to no background in parallel computing: This course assumes no prior knowledge and starts from the basics, making it ideal for beginners.

- Those with some experience in low-level CS: If you've taken courses like CS2106 or have worked with embedded systems, you'll find the material accessible and manageable.

- Students looking for practical experience: The labs and assignments provide hands-on learning that's valuable for understanding and applying parallel computing concepts.

- Learners who value structured guidance: Prof. Cristina and the TAs are highly supportive, ensuring students have the help they need to succeed.

- Not for those with extensive parallel computing knowledge: If you've already covered similar content in courses like CS4224 or have significant experience, this course might not add much value.

Stats

There will be some bias in these stats: I previously did C++ development for research during my internship and self-learned various parallel programming constructs while doing so. I'm also fairly comfortable with the low-level parts of CS, as I used to work with embedded systems, have taken CS2106, and took this course while teaching CS2100 (which meant lots of revision relevant to CS3210). That said, here are the stats, with an * marking the scores where I might be extremely biased:

| METRIC | SCORE |
|---|---|
| Workload for understanding the contents (incl. lecture) | 3* |
| Workload for the labs (assuming you allocate a week for each lab) | 4* |
| Workload for the pair assignments (assuming you allocate a week for each assignment) | 7 |
| Workload for tut prep | 2 |
| Workload for finals prep (assuming a week of prep) | 7 |
| Technical depth of course (contents taught) | 5* |
| Technical depth of exams | 7 |
| Lectures' usefulness wrt. exams | 5 |

What this course is about and Prof. Cristina Carbunaru

Cristina cares about her students. I do not say that lightly. The course is mainly a theoretical introduction to parallel systems, and it is run under the assumption that you know nothing about parallel computing. In practice, that is often not the case for students who have taken CS2106, CS3223, CS3211, or any other CS course that introduced these topics in one way or another. Because of these two factors, you might find the theoretical part of the course (e.g., the lectures) extremely boring most of the time: Cristina assumes you know nothing about the topic and thus explains everything in layman's terms (she's a very nice person and tries to make sure everybody understands), but that takes up time that could otherwise be spent explaining things from a more interesting perspective to those already familiar with the topic.

To give you an idea, the course spent some time explaining different kinds of parallelism in the following order:

1. State the definition of a type of parallelism (let's say, Data Parallelism)

2. Explain what it is

3. Explain why SIMD computers/instructions are designed to exploit data parallelism

4. Give a code example
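For readers who haven't seen step 4 before, a data-parallel loop typically looks something like the sketch below. This is not the course's own example; it assumes C99 and OpenMP 4.0's `simd` directive, and `saxpy` is just an illustrative name for the classic y = a·x + y kernel. Every iteration applies the same operation to a different element with no dependence on any other iteration, which is exactly the property SIMD instructions exploit.

```c
#include <assert.h>
#include <stddef.h>

/* Data parallelism: the same operation (y[i] = a * x[i] + y[i]) is applied
 * independently to every element, so iterations can be mapped onto SIMD lanes.
 * `restrict` promises the compiler that x and y do not overlap. */
void saxpy(size_t n, float a, const float *restrict x, float *restrict y)
{
    assert(n == 0 || (x != NULL && y != NULL));

    /* OpenMP 4.0+ hint: iterations are independent and may be vectorised.
     * Ignored (loop runs serially) if compiled without -fopenmp / -fopenmp-simd. */
    #pragma omp simd
    for (size_t i = 0; i < n; i++) {
        y[i] = a * x[i] + y[i];
    }
}
```

Compiled with `-fopenmp` or `-fopenmp-simd` (GCC/Clang), the pragma asks the compiler to vectorise the loop; without those flags the pragma is ignored and the loop still produces the same result, just without the explicit vectorisation hint.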

By this point in the semester, however, most students had never actually used any of these other kinds of computing devices; they had only heard of them in the previous lecture. That is why this gets so dry: without practical context, the discussion boils down to definitions, which are straightforward enough that students could grasp them on their own with a bit of reflection. As a result, the 5-10 minute discussions on GPGPU added little to no new information.

This may be subjective, and I encourage you to review the source material or lectures yourself; some students enjoy the lectures more than others. That aside, I would argue that starting from a practical example or experience (even a trivial one), then looking at the problem from different perspectives, and only then arriving at the definitions of data and task parallelism, is far more satisfying and gives students more valuable information.

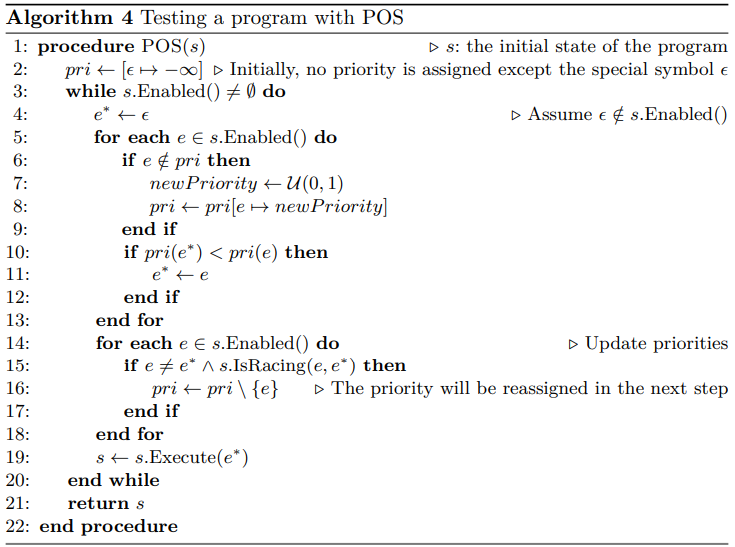

Ultimately, this course approaches parallel computing from several perspectives at once:

1. Parallel Computing Architecture (PCA)

2. Parallel Computing Model (PCM)

3. Parallel Computing Basics (not named as such in the course; I made this term up to describe the more general subtopics that come up within the two above)

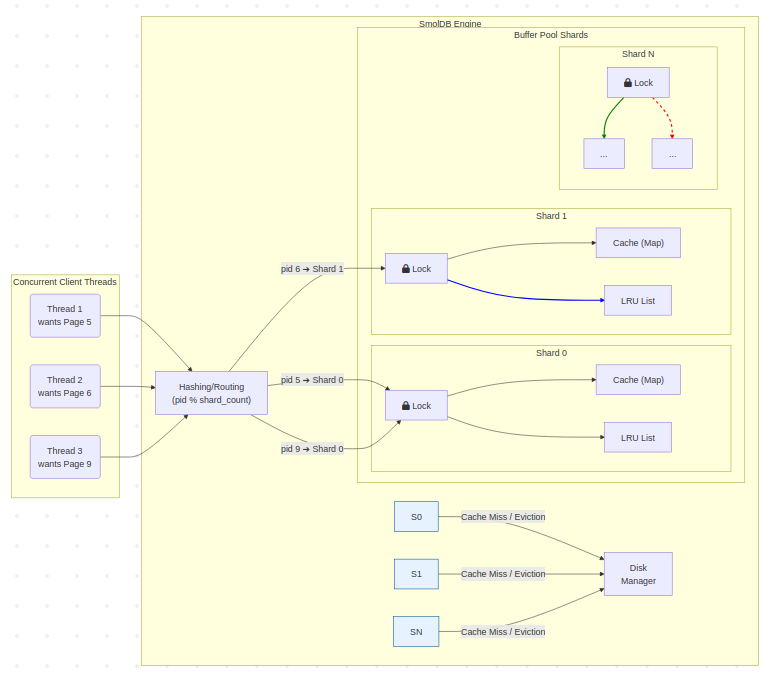

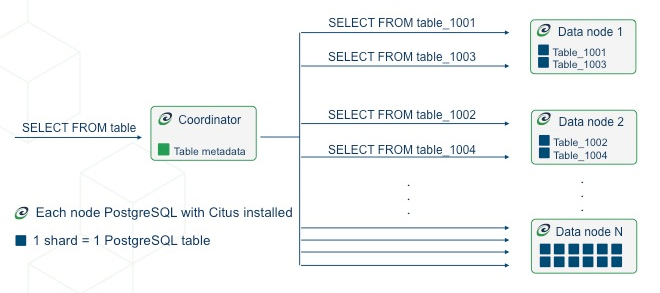

On PCA, you will learn about processor and memory organization, memory consistency models and their problems, and network topologies along with the problems they try to solve. Most of this is theoretical and makes up a huge chunk of the tests. On PCM, you will learn how we exploit the data parallel, distributed memory, and shared memory models in practice in our parallel computing systems. These are the interesting practical bits, and I think they're the most valuable topics to learn. Most of the PCM discussion happens in the labs, tutorials, and assignments.
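To make "network topologies" a bit more concrete, here is a small sketch of the kind of metrics commonly used to compare them. The choice of metrics (node count, link count, diameter, bisection width) and the names `topology_metrics`, `hypercube`, and `mesh2d` are my own assumptions for illustration, not the course's material; the formulas are the standard ones for a d-dimensional hypercube and a k × k 2D mesh without wraparound links.

```c
#include <assert.h>

/* Illustrative (hypothetical) summary of a static interconnection network. */
typedef struct {
    long nodes;
    long links;
    long diameter;        /* longest shortest path between two nodes, in hops */
    long bisection_width; /* min links cut to split the nodes into two equal halves */
} topology_metrics;

/* d-dimensional hypercube: 2^d nodes, each linked to d neighbours. */
topology_metrics hypercube(int d)
{
    assert(d >= 1 && d < 62);
    long p = 1L << d;
    topology_metrics m = { p, (long)d * p / 2, d, p / 2 };
    return m;
}

/* k x k 2D mesh without wraparound links.
 * Restricted to even k so the nodes split into two exactly equal halves. */
topology_metrics mesh2d(long k)
{
    assert(k >= 2 && k % 2 == 0);
    topology_metrics m = { k * k, 2 * k * (k - 1), 2 * (k - 1), k };
    return m;
}
```

For example, an 8-node 3D hypercube has diameter 3 and bisection width 4, while a 16-node 4 × 4 mesh has diameter 6 and the same bisection width of 4: more hops for a similar number of nodes, but far fewer links per node.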

Tutorials and Sriram

Let's first agree that nobody has yet exceeded Sriram in teaching ability; not even me (this is me being unserious, in case you start to think that I'm being an asshole). Teaching is not just about making sure your students understand the topics, but more importantly about structuring the topics the right way and making sure your students understand why they are structured that way.

These super interactive tutorial sessions explore the topics in much more depth and give you valuable practical information to absorb. Attendance is mandatory, but I'd have come anyway, so that didn't matter. Tutorial sheets are handed out and you are encouraged to read them, but you probably don't need to work through them beforehand, as the in-class discussion covers them.

Labs

Since the tutorial TA also runs the labs, there's no need to elaborate further on why the lab sessions are very helpful too: Sriram still explains interesting related topics throughout the lab. You are not expected to finish the lab assignment within the lab session itself; personally, I usually finished half of it, if not more, during the lab sessions.

The biweekly lab assignments themselves are structured really well: I'd be surprised if anyone got lost, given how smoothly the steps in the lab handout progress and how much help is available (Prof Cristina and all the super-dedicated TAs are ready to answer you ANYTIME on the discussion forums, by email, and in the Telegram group). Experienced students may spend 2-3 hours a week on the labs, while beginners might need 4-5 hours. Good time management will reduce stress.

Assignments

Assignments are done in pairs. Only one of them is time-consuming (hard), while the others are rather light. By light, I mean around 5-8 hours per person to finish the assignment; by hard, I mean more than 12 hours. The 3 assignments cover the following topics:

1. OpenMP (shared memory model)

2. GPGPU (CUDA) programming (data parallel model)

3. OpenMPI (distributed memory model)
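For a taste of the shared memory model from the first assignment, here is a minimal OpenMP sketch. It is not assignment code; `parallel_sum` is a hypothetical helper. All threads read the same array in one shared address space, and the `reduction(+:sum)` clause gives each thread a private partial sum that OpenMP combines at the end, avoiding a data race on `sum`.

```c
#include <assert.h>
#include <stddef.h>

/* Shared memory model: every thread sees the same array `a`.
 * reduction(+:sum) gives each thread a private copy of `sum` and adds the
 * copies together after the loop, so no two threads write the same variable. */
long long parallel_sum(const int *a, size_t n)
{
    assert(a != NULL || n == 0);

    long long sum = 0;
    #pragma omp parallel for reduction(+:sum)
    for (size_t i = 0; i < n; i++) {
        sum += a[i];
    }
    return sum;
}
```

Build with `-fopenmp` (GCC/Clang) to actually run the loop across threads; without the flag the pragma is ignored and the loop runs serially with the same result. In the distributed memory model, by contrast, each process would own its own slice of the array and the partial sums would have to be combined explicitly through messages.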

The key is really not to overthink it and just come up with the simplest solution available. Remember: premature optimization is the root of all evil. For OpenMP and OpenMPI, you'll spend most of your time on the reports; the same goes for CUDA programming if you're lucky enough not to hit any bugs. Otherwise, expect to spend a lot of time debugging your CUDA code, which is extremely hellish. Here's a tip I wish I'd known earlier: the moment you find that your code isn't working, switch to CUDA on your own device instead of SoC's. Don't debug on SoC's compute cluster. I swear it's far more worthwhile and easier to debug on your own device.

Assignments are highly practical, providing valuable hands-on experience. They might not be heavily tested in exams, but mastering these topics now is much easier than learning them independently later, making this course a must-take.

Quizzes and Tests

Too lazy to review much here. They're just normal (normal as in good) quizzes and tests. Props to Prof Cristina for making "normal quizzes and tests" so high quality by testing only the right things and not random unrelated skills like most other profs do. Let me tell you this: the tests will reward your effort in trying to truly understand the theoretical parts of the course.

Conclusion

I spent roughly 130 hours ((5 labs × 5h) + (5 tuts × 2h) + (13 lecs × 2h) + (25h assignments) + (4 quizzes and prep × 5h) + (1 exam × 25h)) on this course not including the time I spent dillydallying in between these activities. I think this is a fairly okay course in terms of workload but I'm well aware that others might disagree.

If you still have the bandwidth to take this course, take it. If you find the material too dry from attending the lectures, don't worry about it and don't drop the course. If you're a Year 3 or Year 4 student and have already been exposed to distributed and/or parallel computing (say, from CS4224 or similar courses), I don't think this course will provide much value for you: those courses will already have covered the theory better than CS3210 does, and given you the skills to pick up the rest through easier means than CS3210 provides.