Improving Software Testability with Math

Problem

Even when requirements are clear, verifying software correctness and ensuring our code works in all scenarios without affecting existing logic can be challenging.

This is more apparent on ‘hot’ codes: Excerpts of code that got updated often due to its status as a new base logic or due to the amount of support tickets raised over time. As the code grows within itself, we end up with bugs that are extremely hard to fix -not just because we don’t know how to fix them, but mainly because we don’t know where exactly it went wrong.

I propose the following guidelines for high-growth codebases in organizations lacking sufficient manpower or funds for a dedicated QA team.

Intro to Verification

To test excerpts of a code, we usually have unit tests. E.g. if we have the function requirement

we can test for and to ’show’ that our implementation of works as expected.

What we did just now is testing two inputs of different equivalence classes using a black box method, meaning we expect two different behaviour on different sets of inputs (different equivalence class) and we test it without knowing how the function is actually implemented by the programmer (black box).

However, how do we know that the programmer has exactly implemented the way it should be? How do we know that, in this example, if we test for , won’t output say 50 + 200?

While it’s easy to say “Well, just look at the actual implementation and verify it manually”, this problem is harder to solve when the function is as simple as our function. Functions/methods get complicated easily (in one of my previous workplaces, we had set a guideline of the threshold for cognitive complexity but it is somehow uncommon to find methods that is 20-30x the maximum complexity).

I try to pinpoint the main questions people ask when I complain about code not being testable, and I hope by the end of this article we'll have answered all the questions and have a more concrete grasp of testability:

- What do we look for when we verify something manually?

- How do we verify the correctness of a piece of code?

- Is it possible to systematize this process and rely less on the amount of experience someone has?

- When does a logic or process becomes hard to verify manually? Is there a threshold for it?

We first look at the strictest way we can formally verify the correctness of a software.

What even is Formal Verification?

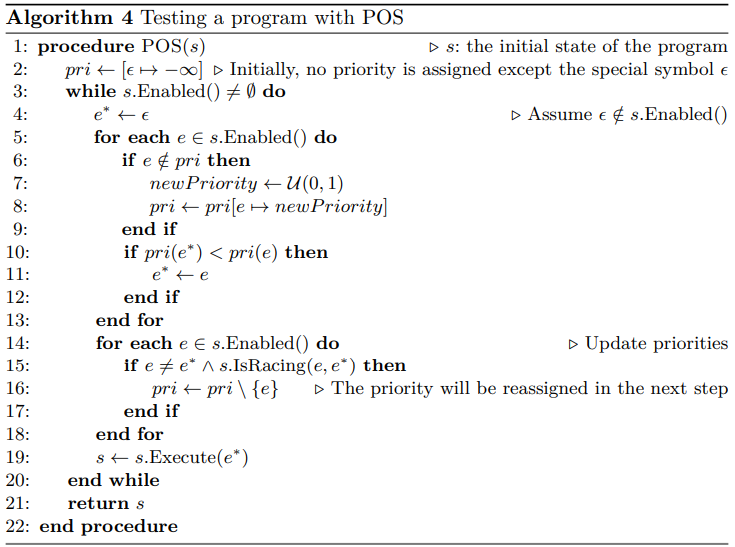

Formal testing is a generic field in Computer Science and SWE that seeks not just cases where a particular logic fails, but also look for every single possible failure cases. There are a lot of ways to explain this, but essentially, while normal testing is searching for an absence of correctness, formal testing is instead looking for absence of failure -regardless of whoever wrote the code and how complex it is.

One example of this is deductive verification via SAT/SMT solvers. If you have a pure function, you can try doing this by stating all your assumptions and expected results in statements like x is a integer greater than 50, or this block of code where we increment x by one only occurs when the input y is greater than 100, etc. In the end, you can convert this into a series of logical clauses that would resemble the input to a Boolean-SAT problem. Once you arrange them, you'll get something in the form of:

(clause1) (clause2) (clause3) (clauseN)

Which can then be verified using any available SAT solver.

As you may have guessed, actual formal verification-driven development is expensive to setup as it involves a lot of system design requirements and verification; this is why it’s only used for correctness-critical systems (e.g., distributed databases, flight control system, medical devices). That aside, though, achieving a cheap knockoff version of this method is relatively easy and cheap enough that the benefit far outweighs the cost.

While in the end we still need to mathematically verify such logic either through Boolean logic or through normal algo proof, we can practice semi-formal testing-oriented development which seeks to ease us the process of doing these proofs with a basic discrete math skills we all have in our arsenal. Similar to a a fit body, we can follow healthy living pattern and exercises in our daily lives even though we’re not necessarily elite athletes.

Semi-formal Testing-Oriented Development

This is a term I made up just now which emphasizes how approximating a formal testing development as a culture can greatly help us work in somehow-correctness-critical systems. Here is how we can practice this:

Proof sketches on code

We all know how to do basic discrete math and how to prove basic algorithms. In fact, if you don't agree with that statement, you should probably stop developing anything and don't come back ever before I bonk you.

An easy way to prove correctness or to simply convince other people that your code is correct while making it easy for them to verify your proof is to tie your proof sketch to your code. A great example of this is how PostgreSQL does this in the following: https://github.com/postgres/postgres/tree/master/src/backend/optimizer

You can see how they incorporate a README in each of their system to explain what it does, how it works, and what properties it takes. You might think that we don't have time for this and you're probably right; but we can at least practice their way of tying proof to their code: https://github.com/postgres/postgres/blob/master/src/backend/optimizer/prep/prepagg.c

Notice the following on line 288:

c... /* * Check whether partial aggregation is feasible, unless we * already found out that we can't do it. */ if (!root->hasNonPartialAggs) { /* * If there is no combine function, then partial aggregation * is not possible. */ if (!OidIsValid(transinfo->combinefn_oid)) root->hasNonPartialAggs = true; /* * If we have any aggs with transtype INTERNAL then we must * check whether they have serialization/deserialization * functions; if not, we can't serialize partial-aggregation * results. */ else if (transinfo->aggtranstype == INTERNALOID) { ...

We can see how they incorporate proof sketches inside the code itself. This is not necessarily formal, but it is easy for people to verify the sentence "If there is no combine function, then partial aggregation is not possible." or its contrapositive "If partial aggregation is possible, then there has to be a combine function." either through unit tests or through manual verification. In short, these help you with two things:

- Verifying the correctness of the sentence/assumption/proof sketch itself, and

- Verifying the correctness of the code with respect to the sentence.

Practicing this without bloating your code with comment is an art you should master.

Principle before logic

There is a good reason why some very common SWE principles are very common and are abided to by all the most monumental pieces of codebases there are: It’s not to make the code looks beautiful (even though it is), but because it is mandatory. Unlike code style, the measure of maintainability, scalability, and extendibility are not abstract subjective concepts, but rather a result of the amalgamation of said basic principles.

SWE principles serves as a guiding heuristic which ensures that regardless of your ability to properly write the logic of a process, it is still going to be easy to verify. Designing software or codebases with the aim of enforcing SWE principles, thus, should be prioritized over implementing a working logic.

Example: When a project requires an MVP to be implemented, it is tempting to break principles and abstractions under the banner of "we want to write this fast". I once worked with this sort of team and it is hellish as we know for a fact that if the project proposal is accepted, we'll have to scale and extend the MVP; nobody has the time to rewrite from scratch the MVP we spent three weeks to build, so developers end up working on a shitty foundation for years.

Before you start complaining, let me remind you: no, designing high quality MVP does not mean you do it slow. In fact, here is a response you can quote me on:

If being a principled engineer slows your team progress down, then your problem is that you got a major skill issue on your developers and your team.

Black box before white box

A code that passes black box verification, say, by unit test, should give enough working confidence that it works within what the black box is testing. If the code passes black box test on equivalence class , we can be assured that the possibility of any error occurring in is extremely small -if not zero.

Moreover, most developers are pretty busy and it's humane to expect them skipping reviews of seemingly 'simple' logic. Having a proper unit testing culture helps to minimize error stemming from such mistake.

There should be a whole article discussing unit testing culture, but here's some brief:

Obligating Proper Unit Test

When working in ShopBack, we were forced to have 75% code test coverage or else the code won't deploy. Although it's frustrating that a lot of developers simply write wide-range "unit tests" simply for the coverage and performing all sort of dirty trick to achieve that, I believe this is a step towards the best unit test culture. Here's how we can elaborate on that:

- Make sure that the team is clear on what a unit test is and how to write them. Unit tests should be unitary in what they test, dependency-free within the test process, and clear in which equivalence classes are they testing for.

- Set a threshold bypass for coverage: There may be cases where, within a tight schedule, testing all equivalence classes is not necessary or that the logic is so simple that no test is even needed at all. In such cases, it might be wise to relax the coverage requirement only for these MRs -with the approver's permission. There might also be cases where proof sketches were made on README-s but the tests have yet to be written completely. Setting a bypass for coverage threshold may help here and the rest of the task can be written as tech debts.

- Reject MRs with improper unit test: A unit test can either be needed, not needed, or delayed (debt); anything beyond that is a waste of resource. By forcing this culture upon the developers, they would naturally try to write codes that are suitable for easy unit testing.

Example: When a developer writes a function that violates DID or SRP for the sake of tight schedule, he/she would soon notice that that function is hard to test. The mandatory proper unit testing serves as a counterbalance here:

- Violating DID makes it extremely hard to replace the dependency with a stub, making the unit test not dependency-free.

- Violating SRP increases the number of equivalence classes exponentially, which makes it tedious to unitarily test each one of them.

Assertion of Presumptions

Instead of saying

This function works as long as the input is correct.

We reduce the possibility of error and the load of verification to the function by defensively asserting all the assumptions in the beginning:

textfunction f(x,y,z) { Assert x fulfills AssumptionA(x) Assert y fulfills AssumptionB(y) Assert z fulfills AssumptionA(z) and AssumptionC(z) ... rest of the function }

This is a cheap and easy way to ease future debugging activities: we ensure that if we found any bug concerning this function, we know the mistake is in the logic and not its input.

Can we replace this with unit test?

Not really. While unit testing may test subsets of equivalence classes, it does not (and not supposed to) test for faulty input. Moreover, if we consider faulty input as a valid input that has its own equivalence class (e.g., we want the function to log an error to the DB upon facing faulty input), we would need to write the test for ALL the classes generated by this new partitioning rule which is too inconvenient.

Foreword

While this article doesn't cover all practices relating to approximating a somewhat-formal testing-oriented development, I hope it serves enough as a brief of how to improve testability and how we cannot write correct, high-quality code without basic proving skills.